LookAhead

Voice-activated digital assistant that describes objects to blind users using AdHawk eye-tracking glasses.

Python, Google Vision AI, OpenCV, PyAudio, SpeechRecognition

I'm a first-year undergraduate in computer science at the University of Toronto working on computational linguistics research with Prof. Yang Xu.

Previously, I worked with David Martin and Dr. Meaghan O'Reilly as an intern at the Sunnybrook Research Institute (SRI). I have also represented Canada three times at the International Linguistics Olympiad (IOL).

At Sunnybrook, I developed an automated method for vertebrae segmentation to accelerate focused ultrasound (FUS) treatment planning. Using convolutional neural networks (U-Net architecture), I adapted models trained on human CT scans for application in porcine experiments.

This approach reduced segmentation time from more than three hours to minutes, enabling faster and more accurate treatment simulations. My work demonstrated how AI-driven medical imaging can streamline preclinical workflows and support the advancement of non-invasive therapies.

Slides from my final presentation are embedded below.

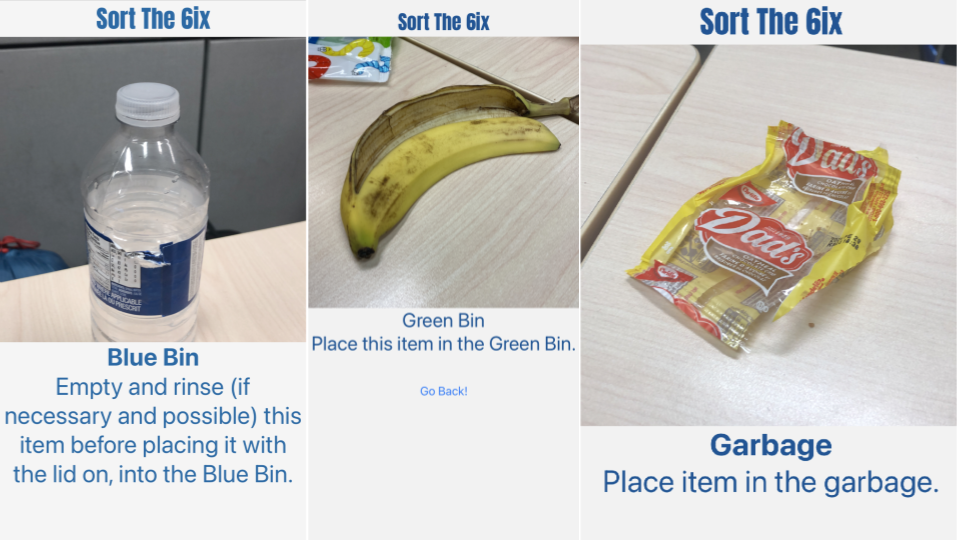

Mobile app that tells users how to dispose of waste based on a real-time photo.

Python, Flask, React Native, HuggingFace, Google Vision AI

Single-player children’s game where players learn how to survive a cold in three levels.

Java, Swing API

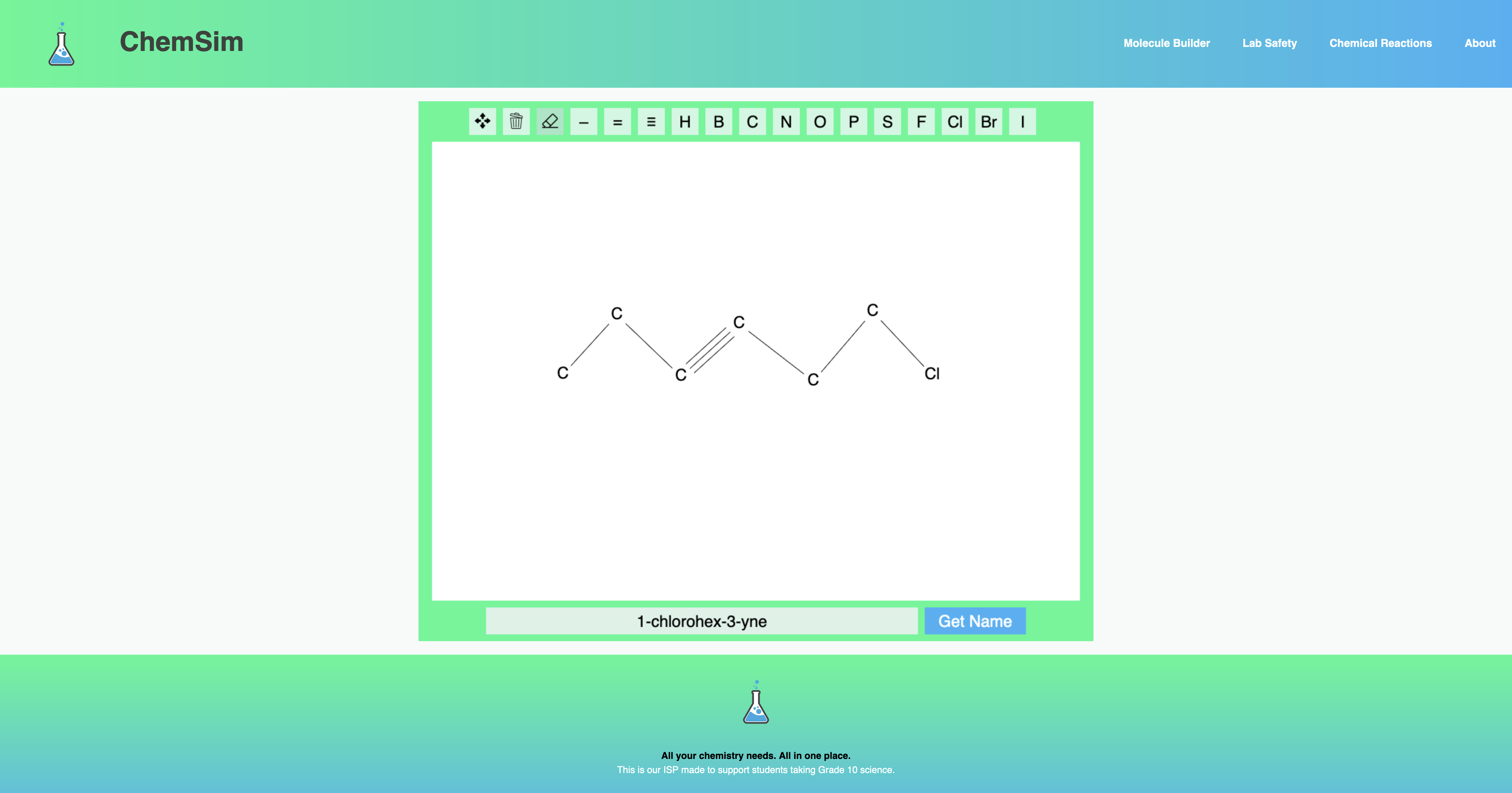

Website with a 2D organic molecule builder and IUPAC name generator to support chemistry students.

JavaScript, Canvas, Fetch API